When building time series forecasting models such as ARIMA (Autoregressive Integrated Moving Average), model selection is critical for reliable forecasts. Two widely used measures for model selection are the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC). Both help analysts trade off model complexity against goodness of fit when choosing among candidate models for their data.

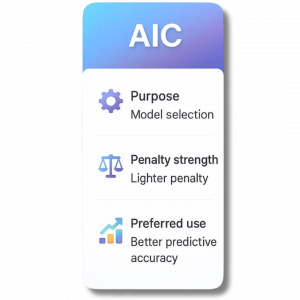

What is AIC?

The Akaike Information Criterion, or AIC, is a statistical measure used to evaluate and compare models. Developed by Hirotugu Akaike, it provides a way to select the model that best explains the data without overfitting.

AIC does this by balancing how well the model fits the data against the number of parameters it uses. A model with more parameters can fit the data more closely, but it may also become needlessly complex and start fitting noise. AIC penalizes each additional parameter, so an extra parameter is worthwhile only if it improves the fit enough to offset the penalty.

A lower AIC indicates a better model. However, AIC is only meaningful when comparing models fitted to the same data; its absolute value says little on its own.
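For reference, the standard definition can be written as follows, where $k$ is the number of estimated parameters and $\hat{L}$ is the maximized value of the model's likelihood (these symbols are introduced here for illustration and do not appear elsewhere in this article):

$$
\mathrm{AIC} = 2k - 2\ln\hat{L}
$$

The first term is the complexity penalty and the second rewards fit, so adding one parameter lowers AIC only if it raises the maximized log-likelihood by more than 1.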

What is BIC?

The Bayesian Information Criterion, or BIC, is a criterion that serves the same purpose as AIC but is derived from a Bayesian perspective. Like AIC, BIC rewards model fit and penalizes complexity. The difference lies in the penalty: AIC's penalty per parameter is fixed regardless of sample size, whereas BIC's penalty per parameter grows with the logarithm of the sample size.

Because this penalty is heavier than AIC's for all but very small samples, BIC tends to select simpler models than AIC does. The model with the lower BIC value is preferred, particularly when the objective is to identify the true underlying structure of the data rather than simply to achieve the best prediction.
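Written out in its standard form, with $k$ the number of estimated parameters, $n$ the number of observations, and $\hat{L}$ the maximized likelihood (notation assumed here for illustration):

$$
\mathrm{BIC} = k\ln n - 2\ln\hat{L}
$$

Each parameter costs $\ln n$ rather than the fixed 2 that AIC charges, so BIC's penalty is the larger of the two whenever $n > e^{2} \approx 7.4$, that is, for eight or more observations.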

Key differences between AIC and BIC

Although both AIC and BIC guide model choice, they differ in how strongly they penalize model complexity. AIC is more lenient and tolerates models with more parameters, which makes it the usual choice when prediction is the main goal. BIC penalizes complexity more harshly and therefore suits the search for the most parsimonious model that still fits the data adequately.
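A small numerical sketch can make the disagreement concrete. The two "fits" below are invented for illustration (the log-likelihoods, parameter counts, and sample size are assumptions, not results from real data); the functions simply apply the standard formulas AIC = 2k - 2 ln(L) and BIC = k ln(n) - 2 ln(L).

```python
import math


def aic(log_likelihood: float, k: int) -> float:
    """Akaike Information Criterion: fixed penalty of 2 per parameter."""
    return 2 * k - 2 * log_likelihood


def bic(log_likelihood: float, k: int, n: int) -> float:
    """Bayesian Information Criterion: penalty of ln(n) per parameter."""
    return k * math.log(n) - 2 * log_likelihood


# Hypothetical fitted models (values made up for illustration only).
n_obs = 200
simple_fit = {"loglik": -310.0, "k": 2}   # fewer parameters, slightly worse fit
complex_fit = {"loglik": -306.5, "k": 5}  # more parameters, slightly better fit

for name, fit in [("simple", simple_fit), ("complex", complex_fit)]:
    a = aic(fit["loglik"], fit["k"])
    b = bic(fit["loglik"], fit["k"], n_obs)
    print(f"{name:8s} AIC={a:.1f}  BIC={b:.1f}")
# simple   AIC=624.0  BIC=630.6
# complex  AIC=623.0  BIC=639.5
```

With these made-up numbers, AIC narrowly favors the larger model while BIC clearly favors the smaller one, because the three extra parameters cost 6 under AIC but roughly 15.9 under BIC at n = 200.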

Application of AIC and BIC in ARIMA models

ARIMA models are commonly used for time series forecasting and are specified by three orders: the autoregressive order (p), the degree of differencing (d), and the moving average order (q). Choosing the right combination of these orders is the central task when building an ARIMA model.

To do this, analysts fit multiple ARIMA models with different values of p, d, and q, and calculate AIC and BIC for each. The model with the lowest AIC or BIC is then selected.

This process helps ensure that the chosen ARIMA model describes the past data adequately without unnecessary complexity. Many statistical packages automate it by trying various combinations of ARIMA orders and ranking them by AIC or BIC.
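The search described above can be sketched in plain Python. As a simplifying assumption, this example restricts the candidates to pure autoregressive models, that is ARIMA(p, 0, 0), fitted through the Yule-Walker equations with the Levinson-Durbin recursion, and it uses the usual Gaussian approximation to the log-likelihood; a full ARIMA search would normally take the log-likelihood from a library's estimator instead. The simulated series, the function names, and the choice to count k = p + 1 parameters are all illustrative. Keeping d fixed also keeps the criteria comparable, since every candidate is scored on the same undifferenced data.

```python
import math
import random


def autocovariances(x, max_lag):
    """Biased sample autocovariances r[0..max_lag] of a series."""
    n = len(x)
    mean = sum(x) / n
    centered = [v - mean for v in x]
    return [
        sum(centered[t] * centered[t + lag] for t in range(n - lag)) / n
        for lag in range(max_lag + 1)
    ]


def ar_innovation_variances(r):
    """Levinson-Durbin recursion: residual variance of AR(p), p = 0..len(r)-1."""
    variances = [r[0]]  # AR(0): the residual variance is the series variance
    phi = []            # AR coefficients of the current order
    for p in range(1, len(r)):
        acc = r[p] - sum(phi[j] * r[p - 1 - j] for j in range(p - 1))
        kappa = acc / variances[-1]  # partial autocorrelation at lag p
        phi = [phi[j] - kappa * phi[p - 2 - j] for j in range(p - 1)] + [kappa]
        variances.append(variances[-1] * (1 - kappa ** 2))
    return variances


def information_criteria(sigma2, p, n):
    """Approximate Gaussian (AIC, BIC) for an AR(p) fit with residual variance sigma2."""
    k = p + 1  # assumption: count p AR coefficients plus the noise variance
    loglik = -0.5 * n * (math.log(2 * math.pi * sigma2) + 1)
    return 2 * k - 2 * loglik, k * math.log(n) - 2 * loglik


# Simulate a hypothetical AR(2) series (coefficients chosen for illustration).
random.seed(0)
series = [0.0, 0.0]
for _ in range(500):
    series.append(0.6 * series[-1] - 0.3 * series[-2] + random.gauss(0, 1))
series = series[2:]  # drop the two starting zeros

max_order = 6
n = len(series)
variances = ar_innovation_variances(autocovariances(series, max_order))
scores = {p: information_criteria(variances[p], p, n) for p in range(max_order + 1)}

best_aic = min(scores, key=lambda p: scores[p][0])
best_bic = min(scores, key=lambda p: scores[p][1])

for p, (a, b) in scores.items():
    print(f"AR({p}): AIC={a:.1f}  BIC={b:.1f}")
print("lowest AIC at p =", best_aic, "| lowest BIC at p =", best_bic)
```

Since ln(500) is greater than 2, the order chosen by BIC in this setup can never exceed the order chosen by AIC, which mirrors BIC's preference for simpler models.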

When to use AIC or BIC

The choice depends on the goal of your analysis. If your primary focus is forecasting performance, AIC is generally the better option. If your primary focus is to identify the underlying structure of the data or to build a more interpretable model, BIC is preferred since it imposes a higher penalty on complexity.

In practice, both AIC and BIC are usually calculated and considered together before a model is chosen.

Conclusion

AIC and BIC are two key tools for selecting the best model in time series forecasting. They are especially important when working with ARIMA models. These criteria help analysts balance goodness-of-fit with model complexity. The goal is to choose a model that is both effective and efficient. Whether you need accurate forecasts or clear model interpretation, understanding AIC and BIC is essential. Applying them can greatly improve the quality of your time series analysis.